Apple and UC Santa Barbara Researchers Introduce AI Image Editing Tool

MGIE: Revolutionizing Image Editing with Text-Based Instructions

NEWS AI February 8, 2024 Reading time: 2 Minute(s)

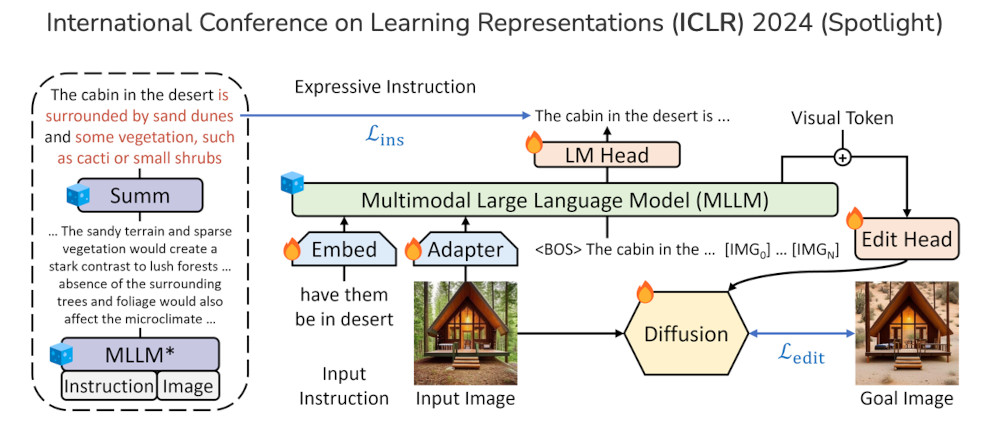

Apple, in collaboration with researchers from the University of California, Santa Barbara, has unveiled a groundbreaking advancement in the realm of image editing: MGIE. This innovative AI tool, described in a paper accepted at the International Conference on Learning Representations (ICLR) 2024, represents a significant leap forward in the convergence of language understanding and visual manipulation capabilities.

MGIE, short for MLLM-Guided Image Editing, interprets text prompts to execute intricate image edits. Similar in concept to Google Gemini, MGIE lets users articulate editing commands in natural language, harnessing the power of AI to translate textual instructions into tangible visual transformations.

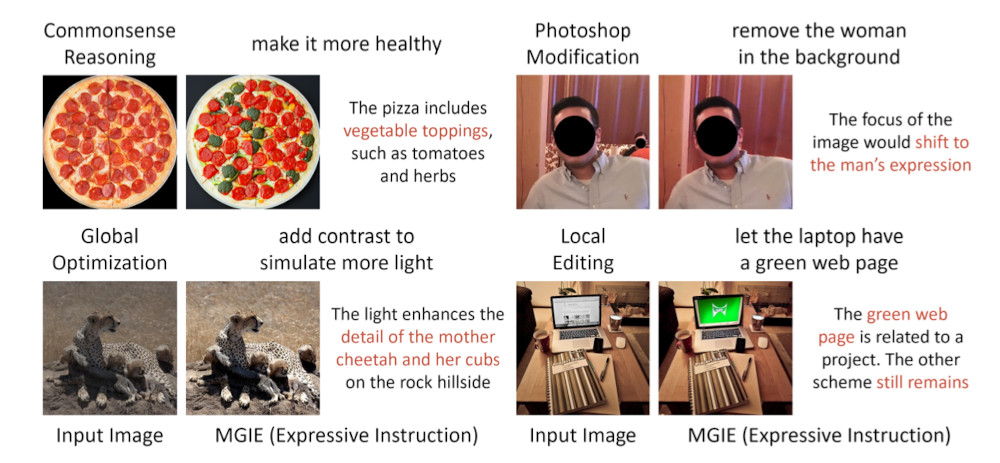

The versatility of MGIE is exemplified by its diverse range of editing functions. From making a pizza look healthier by adding nutritious toppings to seamlessly removing elements from or adding elements to photographs, altering colors, adjusting lighting, or even transforming landscapes, MGIE goes beyond conventional editing paradigms.

The core architecture of MGIE comprises a Multimodal Large Language Model (MLLM) coupled with a diffusion model. The MLLM excels in distilling intricate instructions into succinct visual directives, facilitating seamless communication between users and the AI system. Concurrently, the diffusion model orchestrates image manipulation, iteratively refining edits to align with user intent.
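To make the two-stage flow concrete, here is a toy sketch of how an instruction might pass through a language stage and then an iterative editing stage. This is not MGIE's actual code: the names `VisualDirective`, `derive_directive`, `refine`, and `edit` are hypothetical, the "MLLM" is a keyword-matching stub, and the "diffusion" stage is a simple step-by-step blend toward a target rather than a real denoising process.

```python
from dataclasses import dataclass
from typing import List

# Toy stand-in for an image: a flat list of pixel brightness values in [0, 1].
Image = List[float]


@dataclass
class VisualDirective:
    """Concise edit guidance derived from a free-form instruction (hypothetical type)."""
    description: str
    brightness_shift: float


def derive_directive(instruction: str) -> VisualDirective:
    """Stand-in for the MLLM stage: turn a loose instruction into an explicit directive.

    A real MLLM would reason over both the image and the text; this stub
    only matches keywords so that the example stays runnable.
    """
    text = instruction.lower()
    if "brighter" in text or "lighten" in text:
        return VisualDirective("increase overall brightness", 0.2)
    if "darker" in text or "dim" in text:
        return VisualDirective("decrease overall brightness", -0.2)
    return VisualDirective("leave image unchanged", 0.0)


def refine(image: Image, directive: VisualDirective, steps: int = 10) -> Image:
    """Stand-in for the diffusion stage: apply the edit in small iterative steps."""
    # The target is the input shifted by the directive, clamped to [0, 1].
    target = [min(1.0, max(0.0, p + directive.brightness_shift)) for p in image]
    current = list(image)
    for _ in range(steps):
        # Each step moves every pixel halfway toward its target value.
        current = [c + 0.5 * (t - c) for c, t in zip(current, target)]
    return current


def edit(image: Image, instruction: str, steps: int = 10) -> Image:
    """Run both stages: instruction -> directive -> iteratively refined image."""
    return refine(image, derive_directive(instruction), steps)


if __name__ == "__main__":
    print(edit([0.1, 0.5, 0.9], "Make it brighter"))
```

The point of the split is that the first stage resolves ambiguity in the user's wording, so the second stage only has to follow an explicit directive.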

Whether MGIE will make its way into consumer-facing products remains a matter of speculation. Given Apple's ongoing efforts to integrate generative AI features into its ecosystem, the tool could plausibly appear in future iterations of its flagship devices, such as the anticipated iPhone 16 series.

For enthusiasts eager to experience the transformative potential of MGIE firsthand, a demonstration is available here, offering a glimpse into the future of Apple's intuitive, text-driven image editing. A gallery of MGIE-generated images is also available here.

IMAGE CREDITS: APPLE / UNIVERSITY OF CALIFORNIA, SANTA BARBARA

